This page appeared in The Hindu’s e-paper today.

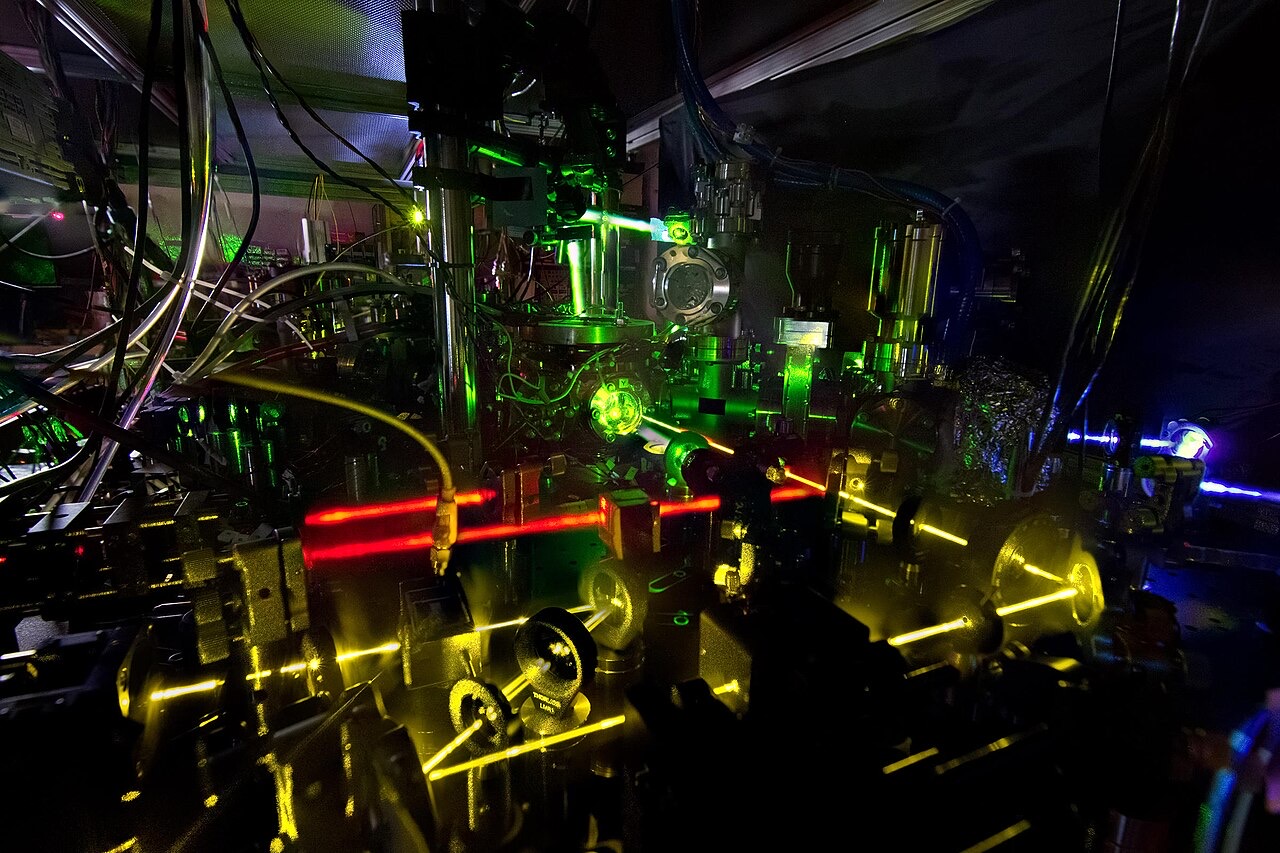

I wrote the lead article, about why scientists are so interested in an elementary particle called the top quark. Long story short: the top quark is the heaviest known elementary particle, and because massive elementary particles like quarks get their masses by interacting with the Higgs field, the top quark’s interaction with it is the strongest. This has piqued physicists’ interest because the Higgs boson’s own mass is peculiar: it’s more than expected and at the same time poised on the brink of a threshold beyond which our universe as we know it wouldn’t exist. To explain why it sits so precariously, physicists are intently studying the top quark, including measuring its mass with ever greater precision.
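For readers who’d like the arithmetic behind “the top quark’s interaction is the strongest”, here is a rough back-of-the-envelope sketch. It assumes the textbook Standard Model relation between a charged fermion’s mass and its Yukawa coupling $y$ (the strength of its interaction with the Higgs field), and it uses rounded values: a Higgs vacuum expectation value $v \approx 246$ GeV, a top quark mass of about 173 GeV, and an electron mass of about 0.511 MeV.

$$
m_f = \frac{y_f\, v}{\sqrt{2}} \quad\Longrightarrow\quad y_f = \frac{\sqrt{2}\, m_f}{v}
$$

$$
y_t \approx \frac{1.414 \times 173\ \text{GeV}}{246\ \text{GeV}} \approx 0.99,
\qquad
y_e \approx \frac{1.414 \times 0.000511\ \text{GeV}}{246\ \text{GeV}} \approx 2.9 \times 10^{-6}
$$

On these approximate numbers, the top quark’s coupling comes out close to 1, hundreds of thousands of times the electron’s.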

It’s all so fascinating. But I’m well aware that not many people are interested in this stuff. I wish they were, and my reasons follow.

There exists a fairly healthy journalism of particle physics today. Most of it happens in Europe and the US, where (i) famous particle physics experiments are located, (ii) an industry of good-quality science journalism already exists, and (iii) there are countries and/or governments that actually have the human resources, money, and political will to fund the experiments. (In many other places, including India, these resources don’t exist, which renders moot the question of people grappling with these experiments.)

In this post, I’m using particle physics both in its own right and as a stand-in for other reputedly esoteric fields of study.

This journalism can be divided into three broad types: those with people, those concerned with spin-offs, and those without people. ‘Those with people’ refers to narratives about the theoretical and experimental physicists, engineers, allied staff, and administrators who support work on particle physics, and about their needs, challenges, and aspirations.

The meaning of ‘those concerned with spin-offs’ is obvious: these articles attempt to justify the money governments spend on particle physics projects by appealing to the technologies scientists develop in the course of particle-physics work. I’ve always found these to be apologist narratives that set up a bad expectation: that we shouldn’t undertake these projects if they don’t also produce valuable spin-off technologies. I suspect most particle physics experiments don’t produce such technologies, because they are much smaller than the behemoth Large Hadron Collider and its ilk, whose scale demands more innovation across diverse fields.

‘Those without people’ are the rarest of the lot — narratives that focus on some finding or discussion in the particle physics community that is relatively unconcerned with the human experience of the natural universe (setting aside the philosophical point that the non-human details are being recounted by human narrators). These stories are about the material constituents of reality as we know it.

When I say I wish more people were interested in particle physics today, I mean I wish they were interested in all these narratives, but more so in those that aren’t centred on people.

Now, why should they be concerned? This is a difficult question to answer.

I’m concerned because I’m fascinated by the things around us that we don’t fully understand but are trying to. It’s a way of exploring the unknown, of going on an adventure. There are many, many things in this world that people can be curious about. It’s possible there are more such things than there are people (again, setting aside the philosophical bases of these claims). But particle physics and some other areas, united by the extent to which they are written off as esoteric, suffer from more than a shortage of patrons in the general (non-academic) population. Many people actively shun them, lose focus when reading about them, and do little to regain it. It has even become acceptable to say one understood nothing of a (well-articulated) article without expecting to be judged for saying so.

I understand why narratives with people in them are easier to understand and connect with, but none of the psychological, biological, and anthropological mechanisms involved also encourages us to reject narratives and experiences without people. In other words, there may have been evolutionary advantages to finding out about other people, but there have been no disadvantages attached to engaging with stories that aren’t about other people.

Next, I have met more than my fair share of people who flinched away from the suggestion of mathematics or physics, even when someone offered to guide them through these topics. I’m also aware that researchers have documented this tendency and are attempting to distil insights that could help improve the teaching and communication of these subjects. Personally, I don’t know how to deal with these people because I don’t know the shape of the barrier in their minds that I need to surmount. I may be trying to vault over a high wall by simplifying a concept to its barest features when in fact the barrier is a low-walled labyrinth.

Third and last, let me do unto this post what I’m asking of people everywhere, and look past the people: why should we be interested in particle physics? It has nothing to offer our day-to-day lives. Its findings can seem totally self-absorbed, supporting researchers and their careers, helping them win famous but otherwise generally unattainable awards, and sustaining discoveries into which political leaders and government officials occasionally dip their beaks to claim labels like “scientific superpower”. But the mistake here is not the existence of particle physics itself so much as the people-centric lens through which we insist it must be seen. It’s not that we should be interested in particle physics; it’s that we can.

Particle physics exists because some people are interested in it. If you are unhappy that our government spends too much on it, let’s talk about our national R&D expenditure priorities and what the practice, and practitioners, of particle physics can do to support other research pursuits and give back to various constituencies. The pursuit of one’s interests can’t be the problem (within reasonable limits, of course).

More importantly, being interested in particle physics, and in fact many other branches of science, shouldn’t have to be justified at every turn, for three reasons: reality isn’t restricted to people, people are shaped by their realities, and exploring the unknown is part of our destiny as humans. On the first two counts: when we choose to restrict ourselves to our lives and our welfare, we also choose never to learn what, say, gravitational waves, dark matter, and nucleosynthesis are (unless these terms turn up in an exam we need to pass). Yet all these things played a part in bringing about the existence of Earth and its suitability for particular forms of life and, among people, for particular ways of life.

The elements in the rocks and metals that gave rise to waves of human civilisation were forged in the bellies of stars. We needed to know our own star as well as we do (which still isn’t much) to build machines that can use its energy to supply electric power. Countries and cultures that support the education and employment of people who make it a point to learn the underlying science thus come out on top. Knowing different things is a way to future-proof ourselves.

Further, climate change is evidence that humans are a planetary species, and soon we will be an interplanetary one. Our own migrations will force us to understand, eventually intuitively, the peculiarities of gravity, the vagaries of space, and (what is today called) mathematical physics. But even before such compulsions arise, it remains that what we know is what we needn’t be afraid of, or at least what we know how to be afraid of. 😀

Equally, learning, knowing, and understanding the physical universe is the foundation we need to imagine (or reimagine) futures better than the ones ordained for us by our myopic leaders. In this context, I recommend Shreya Dasgupta’s ‘Imagined Tomorrow’ podcast series, in which she considers hypothetical future Indias and the paths we can take to get there: Indias where medicines are tailor-made for individuals, where antibiotics don’t exist because they’re not required, where clean air is only available to breathe inside city-sized domes, and where courtrooms use AI.

Similarly, with particle physics in mind, we could also consider cheap access to quantum computers, lasers that remove infections from flesh and tumours from tissue in a jiffy, and communications satellites that reduce bandwidth costs so much that we can take virtual education, telemedicine, and remote surgeries for granted. I’m not talking about these technologies as spin-offs, to be clear; I mean technologies born of our knowledge of particle (and other) physics.

At the biggest scale, of course, understanding how nature works is how we learn the ways in which the universe’s physical reality can or can’t affect us, which in turn helps us understand ourselves better and shape more meaningful aspirations for our species. The better-informed a decision is, the more rational it is likely to be. Granted, most of our decisions are currently only tenuously informed by particle physics, but consider the question the other way around: which decisions are we making less well than we could because we don’t cast our epistemic nets wider, to include physics, biology, mathematics, etc.?

Consider, beyond all this, the awe that astronauts who have travelled to Earth orbit and beyond have reported feeling when they first saw our planet from space, and the immeasurable loneliness surrounding it. There are problems with pronouncements that we should be united in all our efforts on Earth because, from space, we are all we have (especially when the country to which most of these astronauts belong condones a genocide). Fortunately, that awe is not the preserve of spacefaring astronauts. The moment we understood the laws of physics and the elementary constituents of our universe, we (at least the atheists among us) may have realised there is no centre of the universe. In fact, there is everything except a centre. How grateful I am for that. For good measure, awe is also healthy for the mind.

It might seem like a terrible cliché to quote Oscar Wilde here (“We are all in the gutter, but some of us are looking at the stars”), but it’s a cliché precisely because we have often wanted to be able to dream, and to have the simple act of such dreaming contain all the profundity we know we squander when we live petty, uncurious lives. Then again, space is not simply an escape from the traps of human foibles. Explorations of the great unknown, which includes the cosmos, the subatomic realm, quantum phenomena, dark energy, and so on, are part of our destiny because they are the least like us. They show us what else is out there, and thus what else is possible.

If you’re not interested in particle physics, that’s fine. But remember that you can be.

Featured image: An example of simulated data as might be observed at a particle detector on the Large Hadron Collider. Here, following a collision of two protons, a Higgs boson is produced that decays into two jets of hadrons and two electrons. The lines represent the possible paths of particles produced by the proton-proton collision in the detector while the energy these particles deposit is shown in blue. Caption and credit: Lucas Taylor/CERN, CC BY-SA 3.0.