For all their flaws, the science Nobel Prizes – at the time they’re announced, in the first week of October every year – provide a good opportunity to learn about some obscure part of the scientific endeavour with far-reaching consequences for humankind. This year, for example, we learnt about attosecond physics, quantum dots, and in vitro-transcribed mRNA. The respective laureates had roots in Austria, France, Hungary, Russia, Tunisia, and the U.S. Among the many readers who consume articles about these individuals’ work with any zest, the science Nobel Prizes’ announcement is also an occasion for a recurring question: how come no scientist from India – such a large country, of so many people with diverse skills, and such heavy investments in research – has won a prize? I thought I’d jot down my version of the answer in this post. There are four factors:

1. Missing the forest for the trees – To believe that there’s a legitimate question in “why has no Indian won a science Nobel Prize of late?” is to suggest that we don’t consider what we read in the news every day to be connected to our scientific enterprise. Pseudoscience and misinformation are almost everywhere you look. We’re underfunding education, most schools are short-staffed, and teachers are underpaid. R&D allocations by the national government have stagnated. Academic freedom is often stifled in the name of “national interest”. Students and teachers from the so-called ‘non-upper-castes’ are harassed even in higher education centres. Procedural inefficiencies and red tape constantly delay funding to young scholars. Pettiness and politicking rule many universities’ roosts. There are ill-conceived limits on the use, import, and export of biological specimens (and uncertainty about the state’s attitude to them). Political leaders frequently mock scientific literacy. In this milieu, it’s as much about having the resources to do good science as being able to prioritise science.

2. Historical backlog – This year’s science Nobel Prizes have been awarded for work that was mostly conducted between the 1980s and the early 2000s. This is partly because the winning work has to have demonstrated that it’s of widespread benefit, which takes time (the medicine prize was a notable exception this year because the pandemic accelerated the work’s adoption), and partly because each prize most often – but not always – recognises one particular topic. Given that there are several thousand instances of excellent scientific work, it’s possible, on paper, for the Nobel Prizes to spend several decades recognising scientific work conducted in the 20th century alone. Recall that this was a boom time for science, with the advent of quantum mechanics and the theories of relativity, considerable war-time investment and government support, followed by revolutions in electronics, materials science, spaceflight, genetics, and pharmaceuticals, and then the internet. It was also the time when India was finding its feet, especially until economic liberalisation in the early 1990s.

3. Lack of visibility of research – Visibility is a unifying theme of the Nobel laureates and their work. That is, you need to do good work as well as be seen to be doing that work. If you come up with a great idea but publish it in an obscure journal with no international readership, you will lose out to someone who came up with the same idea later but published it in one of the most-read journals in the world. Scientists don’t willingly opt for obscure journals, of course: publishing in better-read journals isn’t easy because you’re competing with other papers for space, the journals’ editors often have a preference for more sensational work (or sensationalisable work, such as a paper co-authored by an older Nobel laureate; see here), and publishing fees can be prohibitively high. The story of Meghnad Saha, who was nominated for a Nobel Prize but didn’t win, offers an archetypal example. How journals have affected the composition of the scientific literature is a vast and therefore separate topic, but in short, they’ve played a big part in skewing it in favour of some kinds of results over others – even if they’re all equally valuable as scientific contributions – and in favouring authors from some parts of the world over others. Journals’ biases sit on top of those of universities and research groups.

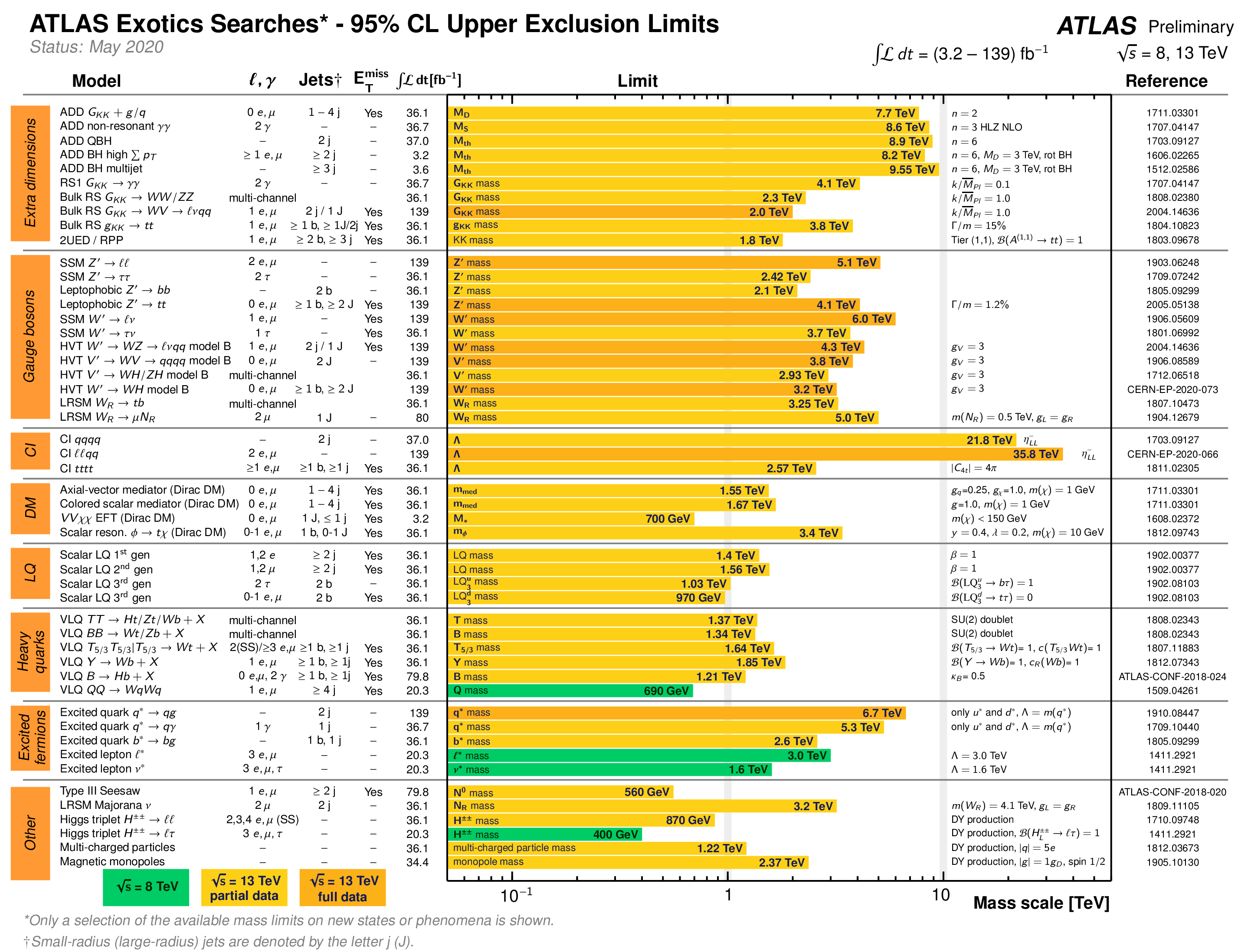

4. Award fixation – The Nobel Prizes aren’t interested in interrogating the histories and social circumstances in which science (that they consider to be prize-worthy) happens; they simply fete what is. It’s we who must grapple with the consequences of our histories of science, particularly science’s relationship with colonialism, and make reparations. Fixating on winning a science Nobel Prize could also lock our research enterprise – and the public perception of that enterprise – into a paradigm that prefers individual winners. The large international collaboration is a good example: when physicists working with the LHC found the Higgs boson in 2012, two physicists who predicted the particle’s existence in 1964 won the corresponding Nobel Prize. Similarly, after scientists at the LIGO detectors in the U.S. first observed gravitational waves in 2015 (a result announced in 2016), three physicists who were instrumental in conceiving and building LIGO won the prize. Yet the LHC, the LIGO detectors, and other similar instruments continue to make important contributions to science – directly, by probing reality, and indirectly, by supporting research that can be adapted for other fields. One 2007 paper also found that Nobel Prizes have been awarded to inventions only 23% of the time. Does that mean we should just focus on discoveries? That’s a silly way of doing science.

The Nobel Prizes began as the testament of a wealthy Swedish man who was worried about his legacy. His will led to a foundation and to committees that select winners of some prizes every year, with some cash from the man’s considerable fortune. Over the years, the committees made a habit of looking for and selecting some of the greatest accomplishments of science (but not all), so much so that the laureates’ standing in the scientific community created an aspiration to win the prize. Many prizes begin like the Nobel Prizes did but become irrelevant because they don’t pay enough attention to the relationship between the laureate-selecting process and the prize’s public reputation (note that the Nobel Prizes acquired their reputation in a different era). The Infosys Prize has elevated itself in this way whereas the Indian Science Congress’s prize has undermined itself. India, or any Indian for that matter, can institute an award that chooses its winners more carefully, and gives them lots of money (which I’m opposed to vis-à-vis senior scientists) to draw popular attention.

There are many reasons an Indian hasn’t won a science Nobel Prize in a while, but it’s not the only prize worth winning. Let’s aspire to other, even better, ones.